Today I'm going to take a look at games with multiple equilibria (which includes poker) and discuss the implications of multiple equilibria for GTO strategies. Because this is a large topic I'll be breaking this discussion into two separate posts.

Understanding games with multiple equilibria is important, because in practice most reasonably complex games have more than one equilibrium and in many cases some equilibria are superior to others. Because of this, understanding the spectrum of equilibria and their properties in depth is an important part of game theory.

Coordination Games

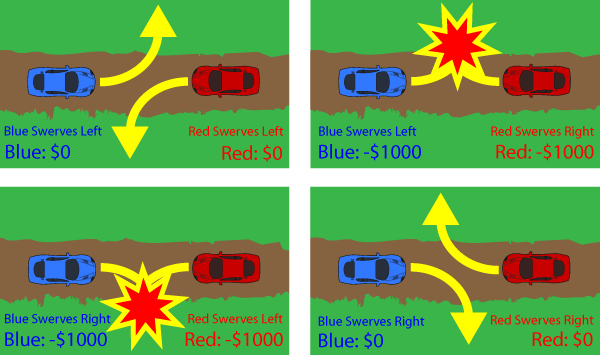

Coordination games are the simplest examples of games with multiple equilibria. I'll walk through a simple example that is sometimes called the "swerving game".

Imagine you are speeding along a narrow country road. You come over a steep hill and realize another car is coming straight at you and you are both driving in the center of the road. Furthermore, you are both going fast enough that there is no time to brake. If you both swerve to the right you will pass each other and avoid an accident. Similarly, if you both swerve to the left there will be no accident. In both these cases your payoff is $0. However, if you swerve to your right and the other driver swerves to his left, or if you swerve to your left and the other driver swerves to his right, you will crash and both incur $1,000 of expenses.

It turns out that this game actually has three equilibria. To see this, just recall the definition of a Nash equilibrium: a pair of strategies where neither player can improve his EV by changing his own strategy alone. Suppose both players' strategy is to swerve to their right. Clearly neither player would want to deviate, as that would cause a crash, so the strategy pair where both players always go right is an equilibrium. Similarly, if both players always swerve to their left that is an equilibrium as well. Both of these equilibrium outcomes give each player $0 in EV.

The third equilibrium is more subtle. Imagine playing this game against a player who randomly goes left half the time and right half the time. In this case, no matter what strategy you employ, you crash half the time, so your EV is -$500 and every strategy is a best response to your opponent's actions. This means that both drivers swerving right 50% and left 50% is also an equilibrium. However, the EV of this equilibrium is much worse for both players: on average each player loses $500 if they play it.
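As a quick sanity check, here is a minimal Python sketch that computes a driver's EV in the swerving game and tests which symmetric strategy profiles are equilibria. The function names, and the restriction to profiles where both drivers swerve right with the same probability, are assumptions made for illustration.

```python
from fractions import Fraction

CRASH_COST = 1000  # dollars lost by each driver in a crash


def payoff(mine: str, theirs: str) -> int:
    """Each driver swerves to his own left or right; matching choices avoid the crash."""
    return 0 if mine == theirs else -CRASH_COST


def expected_value(p_right_me: Fraction, p_right_opp: Fraction) -> Fraction:
    """My EV when I swerve right with p_right_me and my opponent with p_right_opp."""
    ev = Fraction(0)
    for mine, pm in (("right", p_right_me), ("left", 1 - p_right_me)):
        for theirs, po in (("right", p_right_opp), ("left", 1 - p_right_opp)):
            ev += pm * po * payoff(mine, theirs)
    return ev


def is_equilibrium(p: Fraction) -> bool:
    """Both drivers swerve right with probability p: no pure deviation may gain."""
    current = expected_value(p, p)
    return all(expected_value(Fraction(d), p) <= current for d in (0, 1))


for p in (Fraction(0), Fraction(1, 4), Fraction(1, 2), Fraction(1)):
    print(p, expected_value(p, p), is_equilibrium(p))
```

Checking only the two pure deviations is enough here, because the EV of any mixed deviation is a weighted average of the EVs of the pure ones.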

Obviously in this situation everyone would be much better off if they could coordinate and agree in advance that any time they encounter such a situation they will pick one of the pure equilibrium strategies (always go right or always go left) and always play it.

In this game always going right or always going left are both "GTO" strategies in the sense that in isolation they are something a person playing optimally might do. However, an equilibrium is a condition on the set of strategies that both players are using, not on a specific player's strategy in isolation. If you were in a country where people usually drive on the right, for example, such that society had coordinated on a specific equilibrium, deciding to play the strategy where you always go left would still be "GTO" technically, but in practice it would be a bad idea.

Furthermore, if you ended up in a world where somehow everyone was playing the mixed strategy equilibrium that would be terrible for everyone, even though theoretically speaking everyone would be playing GTO. Some theorists even argue that social and cultural norms often emerge to solve coordination problems by establishing an implicit agreement on which equilibria to play.

In general, most complex games have many equilibria. Some may be better for one player at his opponent's expense, and some may just be better or worse for everyone, as in the swerving game. There are a variety of ways of categorizing and classifying equilibria and trying to predict which ones are more likely to be played in practice (for example, in the swerving game the mixed strategy equilibrium is not evolutionarily stable and thus is not something I'd expect to ever encounter in real life). Overall, though, differentiating "good" equilibria from bad ones and trying to predict which equilibrium outcomes will occur is a very complex problem.

Zero Sum Games to the Rescue (sort of)

In zero sum two player games (like heads up poker situations) life is a bit simpler thanks to a key theorem. In two player zero sum games, while there may be many different equilibrium strategies, when both players play equilibrium strategies their EV is the same, no matter which equilibrium strategy either player chooses. Thus a player who plays a GTO strategy is guaranteed at least that EV no matter what his opponent does. There are no good or bad equilibria for either player as long as both players stick to GTO play.

However, there are still many situations where certain GTO strategies perform better against all non-optimal opponents or against specific non-optimal opponents. To see this, let's start by looking at a simple example of a slight variant of the [0,1] game from The Mathematics of Poker (which is a variant of a much older game solved by von Neumann and Morgenstern). The game works as follows:

- There is a pot of 100 chips and both players have 100 chip stacks

- Both players are dealt a single card which has a number chosen uniformly at random between 0 and 1 (inclusive)

- Player 1 can bet 100% pot or check. If Player 1 bets, Player 2 can call or fold. If Player 1 checks, Player 2 must check back

- Higher numbered cards win at showdown

I walk through how to solve this game in detail in The Theory of Winning Part 2 so I will just gloss over the solution to the game in this post.

It turns out that GTO play for the betting player is to bet the top of his range for value (all hands stronger than some value v) and the bottom of his range as a bluff (all hands worse than some value b). The calling player then calls with the top of his range (all hands stronger than some value c).

It is relatively straightforward to solve for the exact optimal values: b = 1/9, v = 7/9 and c = 5/9. That is, Player 1 bluffs with hands below 1/9 and value bets hands above 7/9, and Player 2 calls with hands above 5/9.
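As a rough sketch of where these thresholds come from, the three indifference conditions for a pot-sized bet can be written as a small linear system and solved exactly. The symbol c for the caller's cutoff, the helper `solve_linear`, and the particular form of the equations are assumptions made for illustration; this is the standard indifference argument, not necessarily the derivation used in The Theory of Winning Part 2.

```python
from fractions import Fraction


def solve_linear(a, rhs):
    """Solve a small square linear system exactly with Gauss-Jordan elimination."""
    n = len(rhs)
    m = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(a, rhs)]
    for col in range(n):
        # Find a row with a non-zero entry in this column and move it into place.
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [x / m[col][col] for x in m[col]]
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [row[n] for row in m]


# Unknowns in order (b, v, c), assuming a pot-sized bet:
#   value bet:  the hand v beats exactly half of the calling range  ->  2v - c = 1
#   bluff:      the hand b is indifferent between bluffing and checking -> b - 2c = -1
#   call:       bluffs are one third of the betting range            ->  2b + v = 1
b, v, c = solve_linear(
    [[0, 2, -1],
     [1, 0, -2],
     [2, 1, 0]],
    [1, -1, 1],
)
print(b, v, c)  # 1/9 7/9 5/9
```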

This is a GTO solution to this game, and in this case I think it is quite clear that this is the best GTO solution. However, it turns out that while there is a unique GTO strategy for the betting player, there are infinitely many GTO strategies for the caller in this game and thus infinitely many equilibria.

To see why, just consider Player 2's decision when he holds a hand between 1/9 and 7/9 and his opponent bets. Every one of these hands beats all of Player 1's bluffs and loses to all of his value bets, so against a GTO opponent they all have exactly the same EV for both calling and folding. Furthermore, a value bet is only more profitable than a check for Player 1 with a hand of strength x if x beats at least half of the opponent's calling range.

Suppose that rather than calling with all hands better than 5/9, Player 2 instead calls with all hands better than 6/9 as well as hands between 4/9 and 5/9 and folds everything else. Player 2's EV is exactly the same in this situation, and for Player 1 any hand of strength less than 7/9 still loses to more than half of the caller's calling range, so there is no incentive for him to alter his value betting. Furthermore, Player 2 is calling and beating bluffs with the exact same frequency, so there is no reason for Player 1 to alter his bluffing strategy.

Thus we have another GTO strategy for Player 2, and it is easy to see that infinitely many similar strategies that shift the bottom of Player 2's calling range are all also GTO.
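The claim that Player 1 has no reason to change his betting can be spot-checked numerically. The sketch below compares Player 1's EV of betting and checking against each calling range on a grid of hands. The interval representation of a calling range and all function names are assumptions for illustration, and a grid check is evidence rather than a proof.

```python
from fractions import Fraction

POT = BET = 100


def mass_below(intervals, x):
    """Probability that a uniform [0,1] hand lies in `intervals` and below x."""
    return sum(max(Fraction(0), min(hi, x) - lo) for lo, hi in intervals)


def bet_minus_check(x, call_range):
    """Player 1's EV of betting minus his EV of checking with hand x."""
    called = mass_below(call_range, Fraction(1))   # how often Player 2 calls
    beaten = mass_below(call_range, x)             # calling hands that x beats
    ev_bet = (1 - called) * POT + beaten * (POT + BET) - (called - beaten) * BET
    ev_check = x * POT                             # wins the pot when Player 2 is below x
    return ev_bet - ev_check


def sign(z):
    return (z > 0) - (z < 0)


STANDARD = [(Fraction(5, 9), Fraction(1))]
ALTERNATE = [(Fraction(4, 9), Fraction(5, 9)), (Fraction(6, 9), Fraction(1))]

hands = [Fraction(k, 90) for k in range(91)]
same_incentives = all(
    sign(bet_minus_check(x, STANDARD)) == sign(bet_minus_check(x, ALTERNATE))
    for x in hands
)
print(mass_below(STANDARD, Fraction(1)), mass_below(ALTERNATE, Fraction(1)), same_incentives)
```

Both ranges call 4/9 of the time, and at every grid point betting is preferred, dispreferred, or tied under one calling range exactly when it is under the other.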

Now we know that against a GTO opponent both of these GTO strategies for Player 2 have equal EV, but what about against a sub-optimal Player 1 who value bets too wide with all hands stronger than 5/9 (instead of the GTO cutoff of 7/9) and bluffs according to GTO with hands less than 1/9? Call him SubOptimal1.

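On net, the EVs of the two strategies differ only when SubOptimal1 has a hand between 5/9 and 6/9 and Player 2 has a hand between 4/9 and 6/9. Everywhere else, any call that one strategy makes and the other does not is offset by an equally likely call with the same result. In this case the GTO strategy where Player 2 calls with hands 5/9 and better ends up calling half the time and winning half the time when he calls. A winning call gains 200 chips (the pot plus the bet) and a losing call costs 100, so his EV in this situation is 1/2 * (1/2 * 200 - 1/2 * 100) = 25 chips, or one quarter of the pot.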

The alternate Player 2 GTO strategy that calls with 4/9 to 5/9 and folds 5/9 to 6/9 ends up calling half the time and always losing a pot-sized bet when he calls, for an EV of -50 chips. That's a 75 chip EV difference for Player 2 between these two GTO strategies against SubOptimal1.

The probability of the situation where SubOptimal1 has a hand between 5/9 and 6/9 and Player 2 has a hand between 4/9 and 6/9 is 1/9 * 2/9 = 2/81, so the overall EV difference between the two strategies is 75 * 2/81 = 150/81, or about 1.85 chips per hand.
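The arithmetic above can be double-checked with a short exact calculation. Since every threshold in this example is a multiple of 1/9, the sketch below splits each player's hand into nine equal cells and sums Player 2's EV over all 81 cell pairs. The cell representation and the names are assumptions for illustration. EV here counts chips won by Player 2, with the 100 chip pot treated as up for grabs.

```python
from fractions import Fraction

POT = BET = 100
N = 9  # cell k covers hands between k/9 and (k+1)/9


def p2_ev(p1_bets, p2_calls):
    """Player 2's exact EV in chips; both arguments are sets of cell indices 0..8."""
    total = Fraction(0)
    for i in range(N):        # Player 1's cell
        for j in range(N):    # Player 2's cell
            # Chance Player 2 has the higher card: a coin flip inside the same cell.
            win = Fraction(1, 2) if i == j else Fraction(int(j > i))
            if i not in p1_bets:
                ev = win * POT                              # checked down
            elif j in p2_calls:
                ev = win * (POT + BET) - (1 - win) * BET    # bet and call
            else:
                ev = Fraction(0)                            # bet and fold
            total += ev / (N * N)
    return total


GTO_P1 = {0, 7, 8}             # bluff below 1/9, value bet above 7/9
SUBOPTIMAL1 = {0, 5, 6, 7, 8}  # value bets everything above 5/9
STANDARD = {5, 6, 7, 8}        # call with hands above 5/9
ALTERNATE = {4, 6, 7, 8}       # call with 4/9 to 5/9 and above 6/9

print(p2_ev(GTO_P1, STANDARD) - p2_ev(GTO_P1, ALTERNATE))            # 0
print(p2_ev(SUBOPTIMAL1, STANDARD) - p2_ev(SUBOPTIMAL1, ALTERNATE))  # 50/27, i.e. 150/81
```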

Despite the fact that both strategies are GTO and must perform the same against an optimal opponent, against sub-optimal opponents different GTO strategies can perform quite differently.

In this case one GTO strategy is clearly better than the other, as there is no reason to ever call with a weaker hand over a stronger hand if raising is not an option. The GTO strategy that calls with all hands better than 5/9 will have an equal or higher EV against all possible opponent strategies. However, as we'll see in part 2 of this post (coming soon), there are plenty of situations where there are two or more equilibrium strategies, each of which is better at exploiting certain types of fish and worse at exploiting other types of fish.

This post continues in part 2.
